I’m creating marketing materials for a new project, so I need to generate visual assets and then tweak them subtly. The foundation for that is reliable image replication. With this in mind, I’ve been putting Black Forest Labs’ new FLUX.1 Kontext through its paces, comparing it directly with an established OpenAI model. My initial focus: how well do these models replicate an existing image, especially in terms of aspect ratio, content fidelity, and the fine details I’ll need to adjust later?

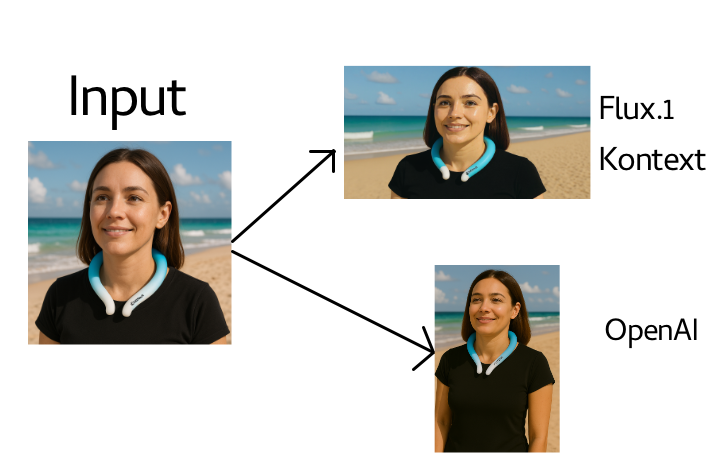

I’ll be sharing a comparison image directly below this post so you can see the outputs side-by-side. Here are my key observations from this test:

- Aspect Ratio Adaptations: Neither model perfectly preserved the original aspect ratio of the input image. As you’ll see in the comparison, FLUX.1 Kontext opted for a wider, landscape-style output, while OpenAI’s model produced a taller, portrait version. This is a critical takeaway: if strict format replication is key, specific prompting or model settings (where available) will be essential.

- Content & Detail Fidelity:

    - In the provided example, both models reasonably maintained the main subject and general beach setting.

    - However, during my broader testing, FLUX.1 Kontext did, on occasion, introduce unexpected content alterations not present in the prompt or source (for instance, in one test, a hand appeared in front of the subject’s face).

    - Consistent with previous observations, both models still exhibit challenges with rendering fine details crisply, such as precise lettering or logos (which you might notice on the neck device in the comparison image). This is particularly relevant for marketing materials where brand elements must be accurate.
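To make the aspect-ratio observation measurable rather than eyeballed, here is a minimal Python sketch of how one might compare an output's format against the source. The function names and the 2% tolerance are my own assumptions for illustration, and the pixel dimensions in the usage section are placeholders, not the actual sizes from this test.

```python
from fractions import Fraction


def orientation(width: int, height: int) -> str:
    """Classify an image as landscape, portrait, or square."""
    if width > height:
        return "landscape"
    if width < height:
        return "portrait"
    return "square"


def aspect_drift(src: tuple[int, int], out: tuple[int, int]) -> float:
    """Relative change in the width/height ratio (0.0 means preserved)."""
    src_ratio = Fraction(src[0], src[1])
    out_ratio = Fraction(out[0], out[1])
    return float(abs(out_ratio - src_ratio) / src_ratio)


def check_replication(src: tuple[int, int], out: tuple[int, int],
                      tolerance: float = 0.02) -> dict:
    """Summarize whether an output kept the source format.

    The tolerance is an assumed threshold; pick one that fits your layout.
    """
    drift = aspect_drift(src, out)
    return {
        "orientation": orientation(*out),
        "drift": drift,
        "ok": drift <= tolerance,
    }


if __name__ == "__main__":
    # Placeholder (width, height) values, NOT measurements from this test.
    source = (1000, 1000)
    outputs = {"model_a": (1500, 1000), "model_b": (1000, 1500)}
    for name, size in outputs.items():
        print(name, check_replication(source, size))
```

The image sizes themselves can be read with whatever imaging tool you already use. A check like this is handy when batch-testing, because a format drift is easy to miss when reviewing dozens of outputs by eye.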

Persistent Challenges: Beyond visual output, it’s worth noting that both models occasionally (and inexplicably) refused image generation due to content moderation, even with entirely innocuous subjects. This remains a practical hurdle for reliable, scaled deployment.

The Upside – Testability: A significant plus is the new BFL Playground from Black Forest Labs. It offers a very accessible and user-friendly environment to directly test and evaluate FLUX.1 Kontext’s capabilities without deep technical integration, which I highly recommend, especially if you have similar use cases. https://playground.bfl.ai/image/edit

My Preliminary Conclusion: This initial comparison underscores that the “best” model is highly use-case dependent. For tasks like creating marketing collateral, where initial replication accuracy sets the stage for further detailed editing, these differences matter. The desired output format, the tolerance for creative deviation versus strict replication, and the quality of fine-detail rendering all play a role. For AI leaders, it highlights the ongoing need for granular evaluation against specific organizational requirements. The journey with generative AI is one of continuous testing and adaptation.

I’m keen to continue exploring FLUX.1 Kontext, especially its in-context editing features, which are directly applicable to my project needs.

What are your initial impressions or test results with the latest image generation models, particularly for marketing or content creation? I’d love to hear your insights!

#AI #ArtificialIntelligence #ImageGeneration #FLUX1Kontext #OpenAI #BlackForestLabs #AILeadership #TechInnovation #HandsOnTest #GenerativeAI #BFLPlayground #DigitalTransformation #Marketing #ContentCreation